Team

Christoph Pohl

KIT, H2T

Fabian Reister

KIT, H2T

Fabian Peller-Konrad

KIT, H2T

Prof. Dr.-Ing. Tamim Asfour

KIT, H2T

Abstract

Achieving versatile mobile manipulation in human-centered environments relies on efficiently transferring learned tasks and experiences across robots and environments. Our framework, MAkEable, facilitates seamless transfer of capabilities and knowledge across tasks, environments, and robots by integrating an affordance-based task description into the memory-centric cognitive architecture of the ARMAR humanoid robot family. This integration enables the sharing of experiences for transfer learning. By representing actions through affordances, MAkEable provides a unified framework for autonomous manipulation of known and unknown objects in diverse environments. Real-world experiments demonstrate MAkEable’s effectiveness across multiple robots, tasks, and environments, including grasping objects, bimanual manipulation, and skill transfer between humanoid robots.

System overview

MAkEable is embedded into the memory-centric cognitive architecture implemented in ArmarX. Several strategies that implement the five steps of the architecture are connected to the robot’s memory.

Use Cases

Grasping of Known Objects

Grasping of Unknown Objects

Object Placement at Common Places

Bimanual Grasping of Unknown Objects

Learning to Interact with Articulated Objects using Kinesthetic Teaching

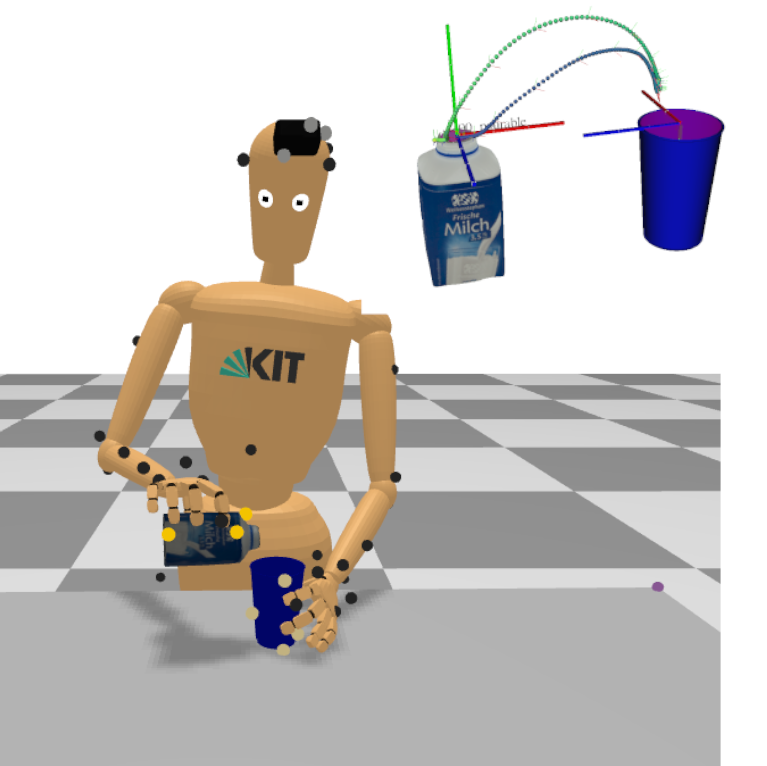

Learning and Transferring Motion of Affordance Frames from Human Demonstration

Experiments

Clearing a Table with Known and Unknown Objects

Memory-enabled Transfer of Drawer-Opening Skill

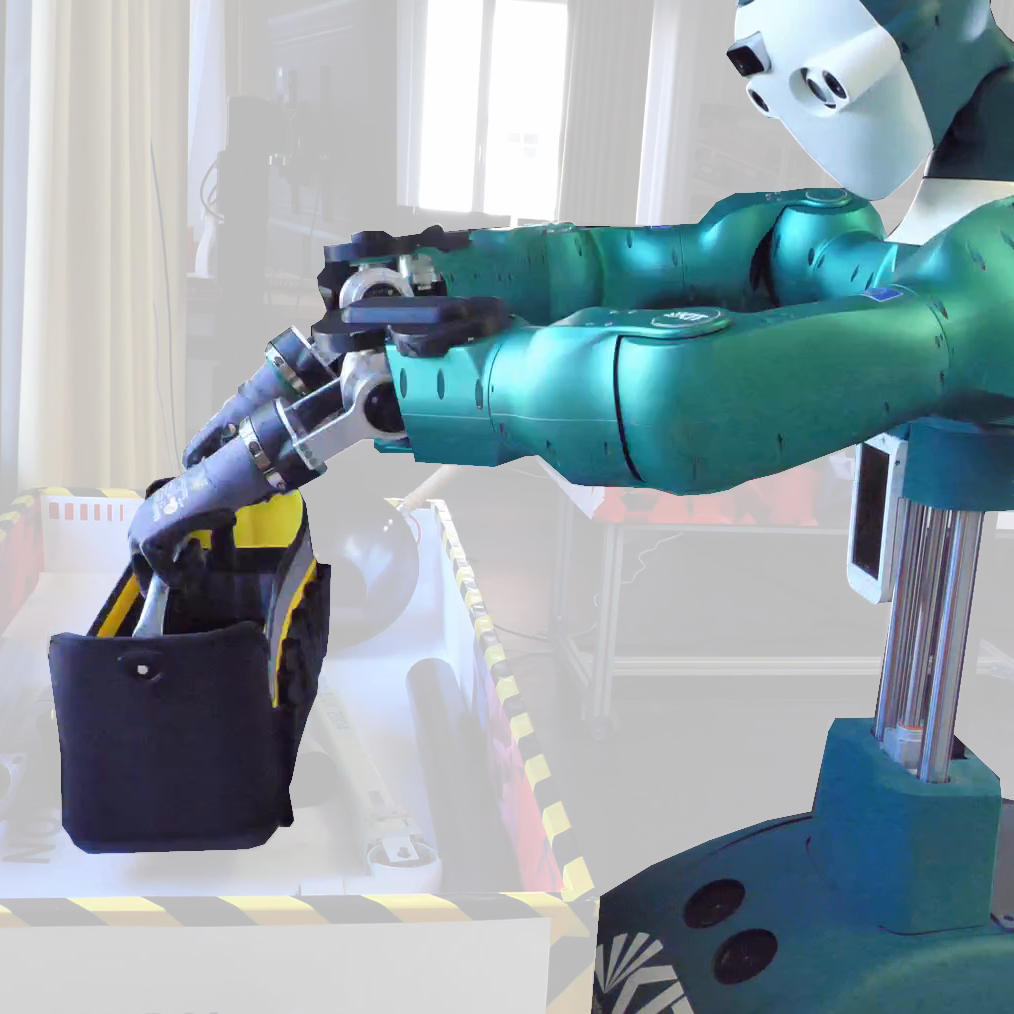

Box Picking through Bimanual Grasping of Unknown Objects

Grasping and Pouring with Non-Humanoid Robot

BibTeX

@misc{PohlReister2024,
  title={MAkEable: Memory-centered and Affordance-based Task Execution Framework for Transferable Mobile Manipulation Skills},
  author={Christoph Pohl and Fabian Reister and Fabian Peller-Konrad and Tamim Asfour},
  year={2024},
  eprint={2401.16899},
  archivePrefix={arXiv},
  primaryClass={cs.RO}
}

Acknowledgements

The research leading to these results has received funding from the German Federal Ministry of Education and Research (BMBF) under the competence center ROBDEKON (13N14678), the Carl Zeiss Foundation through the JuBot project, and the European Union’s Horizon Europe Framework Programme under grant agreement No 101070596 (euROBIN).